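Requirements

I needed to migrate volumes from one Ceph cluster to another, which requires configuring two Ceph backends in Cinder. This post records the configuration process and the problems encountered along the way.

Also note that both Ceph backends are accessed as the same user, cinder. The material I found online all used two different users; when the same user is used for both clusters, some extra configuration is required, which is documented here.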

Environment

Server: CentOS 7.9

OpenStack Cinder: Stein

Ceph: Nautilus

cinder configuration file

On the node running cinder-volume, edit /etc/cinder/cinder.conf. The main changes are:

# Enable both backends

enabled_backends = rbd,rbd232

# Original backend

[rbd]

volume_driver = cinder.volume.drivers.rbd.RBDDriver

rbd_pool = volumes

rbd_cluster_name = ceph

rbd_ceph_conf = /etc/ceph/ceph-cinder.conf

rbd_flatten_volume_from_snapshot = false

rbd_max_clone_depth = 5

rbd_store_chunk_size = 4

rados_connect_timeout = -1

glance_api_version = 2

rbd_user = cinder

rbd_secret_uuid = b3499aaf-b1f0-4085-b8f4-d23d2c0027af

max_over_subscription_ratio = 20

volume_backend_name = rbd

# Newly added backend

[rbd232]

volume_driver = cinder.volume.drivers.rbd.RBDDriver

rbd_pool = volumes

rbd_cluster_name = ceph

# Ceph configuration file for the new backend

rbd_ceph_conf = /etc/ceph/232ceph-cinder.conf

rbd_flatten_volume_from_snapshot = false

rbd_max_clone_depth = 5

rbd_store_chunk_size = 4

rados_connect_timeout = -1

glance_api_version = 2

rbd_user = cinder

# rbd_secret_uuid is the secret libvirt uses to access Ceph; it is not involved in this task, so it is left unset.

#rbd_secret_uuid = b3499aaf-b1f0-4085-b8f4-d23d2c0027af

max_over_subscription_ratio = 20

# The backend name can be chosen freely

volume_backend_name = rbd232

rbd_keyring_conf = /etc/ceph/232ceph-cinder.client.cinder.keyring

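The rbd_keyring_conf option is required when using volume migration. Without it the keyring is None and the connection to the Ceph cluster fails.

The error stack is as follows: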

2023-02-03 15:28:58.142 29763 ERROR os_brick.initiator.linuxrbd PermissionError: [errno 1] error connecting to the cluster

2023-02-03 15:28:58.142 29763 ERROR os_brick.initiator.linuxrbd

2023-02-03 15:28:58.144 29763 DEBUG os_brick.initiator.connectors.rbd [req-a13ac8ca-da18-46e1-b17c-104476292e75 bf3ab6a404d54c1092bf4dfdc7d1fe95 3b8e0f7a3d194d4083ce5226749ae472 - default default] <== connect_volume: exception (73ms) BrickException(u'Error connecting to dms cluster.',) trace_logging_wrapper /usr/lib/python2.7/site-packages/os_brick/utils.py:156

2023-02-03 15:28:58.145 29763 ERROR cinder.volume.manager [req-a13ac8ca-da18-46e1-b17c-104476292e75 bf3ab6a404d54c1092bf4dfdc7d1fe95 3b8e0f7a3d194d4083ce5226749ae472 - default default] Failed to copy volume b4511a73-1c79-4f7b-a9fc-1255af36e38d to 07ddc83b-232b-43ba-9aed-1d6784306b50: BrickException: Error connecting to dms cluster.

2023-02-03 15:28:58.145 29763 ERROR cinder.volume.manager Traceback (most recent call last):

2023-02-03 15:28:58.145 29763 ERROR cinder.volume.manager File "/usr/lib/python2.7/site-packages/cinder/volume/manager.py", line 2228, in _migrate_volume_generic

2023-02-03 15:28:58.145 29763 ERROR cinder.volume.manager self._copy_volume_data(ctxt, volume, new_volume, remote='dest')

2023-02-03 15:28:58.145 29763 ERROR cinder.volume.manager File "/usr/lib/python2.7/site-packages/cinder/volume/manager.py", line 2108, in _copy_volume_data

2023-02-03 15:28:58.145 29763 ERROR cinder.volume.manager attach_encryptor=attach_encryptor)

2023-02-03 15:28:58.145 29763 ERROR cinder.volume.manager File "/usr/lib/python2.7/site-packages/cinder/volume/manager.py", line 2039, in _attach_volume

2023-02-03 15:28:58.145 29763 ERROR cinder.volume.manager attach_info = self._connect_device(conn)

2023-02-03 15:28:58.145 29763 ERROR cinder.volume.manager File "/usr/lib/python2.7/site-packages/cinder/volume/manager.py", line 2003, in _connect_device

2023-02-03 15:28:58.145 29763 ERROR cinder.volume.manager vol_handle = connector.connect_volume(conn['data'])

2023-02-03 15:28:58.145 29763 ERROR cinder.volume.manager File "/usr/lib/python2.7/site-packages/os_brick/utils.py", line 150, in trace_logging_wrapper

2023-02-03 15:28:58.145 29763 ERROR cinder.volume.manager result = f(*args, **kwargs)

2023-02-03 15:28:58.145 29763 ERROR cinder.volume.manager File "/usr/lib/python2.7/site-packages/os_brick/initiator/connectors/rbd.py", line 204, in connect_volume

2023-02-03 15:28:58.145 29763 ERROR cinder.volume.manager rbd_handle = self._get_rbd_handle(connection_properties)

2023-02-03 15:28:58.145 29763 ERROR cinder.volume.manager File "/usr/lib/python2.7/site-packages/os_brick/initiator/connectors/rbd.py", line 123, in _get_rbd_handle

2023-02-03 15:28:58.145 29763 ERROR cinder.volume.manager rbd_cluster_name=str(cluster_name))

2023-02-03 15:28:58.145 29763 ERROR cinder.volume.manager File "/usr/lib/python2.7/site-packages/os_brick/initiator/linuxrbd.py", line 60, in __init__

2023-02-03 15:28:58.145 29763 ERROR cinder.volume.manager self.client, self.ioctx = self.connect()

2023-02-03 15:28:58.145 29763 ERROR cinder.volume.manager File "/usr/lib/python2.7/site-packages/os_brick/initiator/linuxrbd.py", line 89, in connect

2023-02-03 15:28:58.145 29763 ERROR cinder.volume.manager raise exception.BrickException(message=msg)

2023-02-03 15:28:58.145 29763 ERROR cinder.volume.manager BrickException: Error connecting to dms cluster.

2023-02-03 15:28:58.145 29763 ERROR cinder.volume.manager

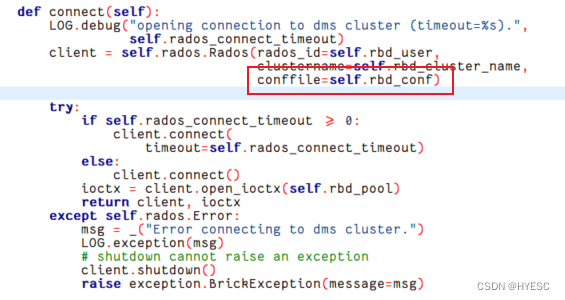

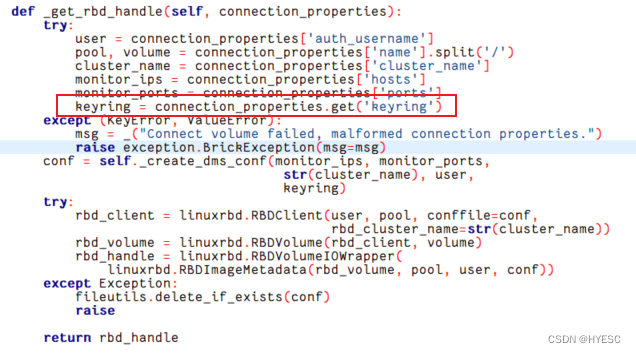

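A brief look at the source: the error ultimately originates in os_brick/initiator/linuxrbd.py, in the code shown in the screenshot. The file circled in red is the configuration file, a temporary path under /tmp/.

Following the error upward, that file is generated in os_brick/initiator/connectors/rbd.py.

The conf in the screenshot is the path of the generated temporary configuration file, and its keyring is None. That suggested the rbd_keyring_conf parameter takes effect here; after setting it, as expected, volume migration no longer failed. I did not analyze the code any deeper.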
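The backend settings discussed above can be sanity-checked before restarting cinder-volume. The following is a minimal sketch, not part of Cinder: the helper name check_rbd_backends and the SAMPLE text are hypothetical, and treating a missing rbd_keyring_conf as a problem is an assumption drawn from the migration failure described here. It uses Python's configparser, which only approximates how Cinder itself reads cinder.conf.

```python
import configparser
import os


def check_rbd_backends(conf_text, file_exists=os.path.exists):
    """Return a list of problems found in the given cinder.conf text."""
    # interpolation=None keeps '%' literal; strict=False tolerates repeated keys.
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(conf_text)

    raw = parser.get("DEFAULT", "enabled_backends", fallback="")
    backends = [name.strip() for name in raw.split(",") if name.strip()]

    problems = []
    for name in backends:
        if not parser.has_section(name):
            problems.append("[%s] is enabled but has no section" % name)
            continue
        # Assumption of this sketch: every RBD backend should name both files.
        for option in ("rbd_ceph_conf", "rbd_keyring_conf"):
            path = parser.get(name, option, fallback=None)
            if not path:
                problems.append("[%s] does not set %s" % (name, option))
            elif not file_exists(path):
                problems.append("[%s] %s points to a missing file: %s"
                                % (name, option, path))
    return problems


# Hypothetical excerpt modelled on the configuration in this post.
SAMPLE = """
[DEFAULT]
enabled_backends = rbd,rbd232

[rbd]
rbd_ceph_conf = /etc/ceph/ceph-cinder.conf

[rbd232]
rbd_ceph_conf = /etc/ceph/232ceph-cinder.conf
rbd_keyring_conf = /etc/ceph/232ceph-cinder.client.cinder.keyring
"""

# file_exists is stubbed so the demo does not depend on local files.
for problem in check_rbd_backends(SAMPLE, file_exists=lambda path: True):
    print(problem)
```

On a real node, the same function could be fed the contents of /etc/cinder/cinder.conf with the default file_exists, so that missing keyring or conf files are reported as well.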

Configuring ceph-cinder.conf

For the new backend, create /etc/ceph/232ceph-cinder.conf with the following content:

[global]

fsid = xxxxxxx

public_network = 192.168.111.0/24

cluster_network = 192.168.1111.0/24

mon_initial_members = executor

mon_host = 192.168.111.112

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

mon_max_pg_per_osd = 1000

osd_crush_update_on_start = false

[client.cinder]

keyring = /etc/ceph/232ceph-cinder.client.cinder.keyring

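The key part is the [client.cinder] section: it is what makes the client read the keyring from the specified file and access the cluster correctly. Without it, the following error is reported:

Error connecting to ceph cluster.: PermissionError: [errno 1] error connecting to the cluster

That is, a permission problem prevents the connection to the Ceph cluster.

This setting and the one in cinder.conf take effect in different ways, so both must be configured.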
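To confirm that a Ceph configuration file actually carries the keyring entry described above, a small check like the following can help. It is a sketch only: the function name client_keyring and the sample text are hypothetical, and configparser is just an approximation of Ceph's own configuration parser.

```python
import configparser


def client_keyring(ceph_conf_text, client="client.cinder"):
    """Return the keyring path set for the given client section, or None."""
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(ceph_conf_text)
    if not parser.has_section(client):
        return None
    return parser.get(client, "keyring", fallback=None)


# Hypothetical excerpt modelled on 232ceph-cinder.conf from this post.
CEPH_SAMPLE = """
[global]
mon_host = 192.168.111.112
auth_client_required = cephx

[client.cinder]
keyring = /etc/ceph/232ceph-cinder.client.cinder.keyring
"""

print(client_keyring(CEPH_SAMPLE))
```

A result of None means the [client.cinder] section or its keyring entry is missing, which is the situation that led to the PermissionError above.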

Wrapping up

Restart cinder-volume with:

systemctl restart openstack-cinder-volume

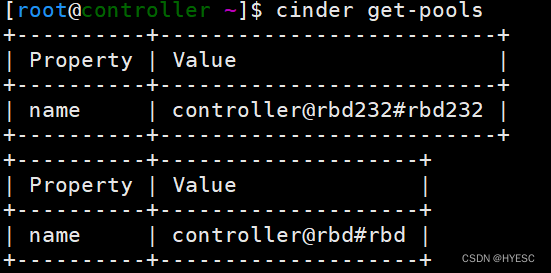

Check whether the backend has been added:

cinder get-pools

Two pools are now listed.

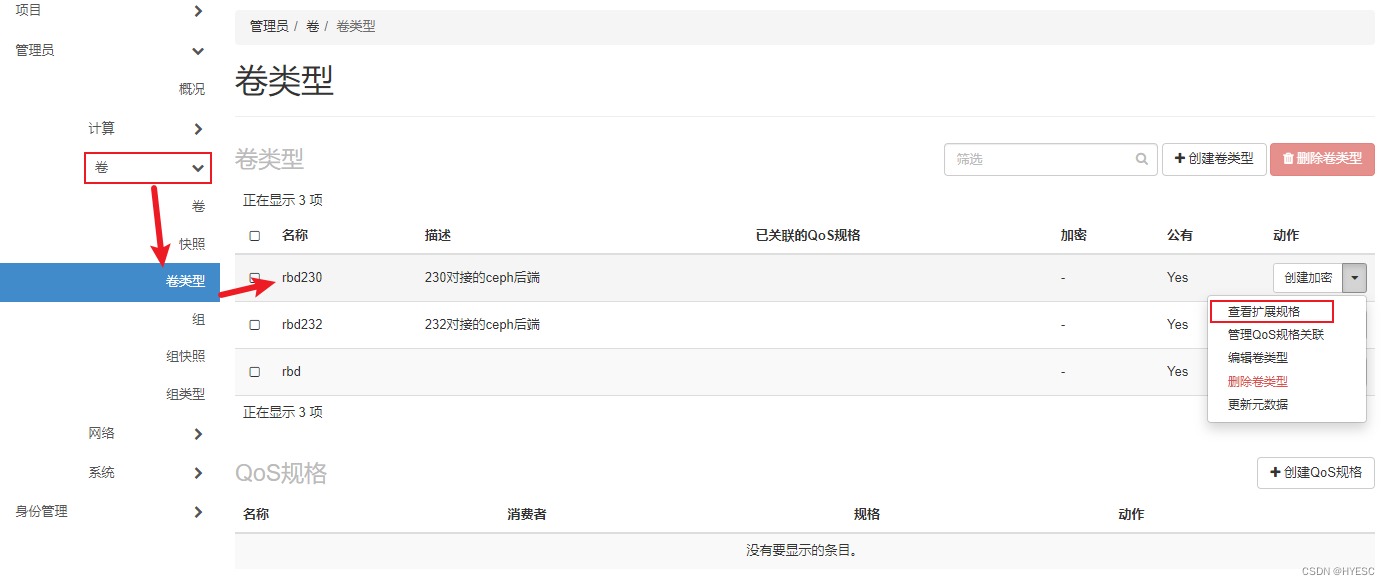

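Notes on volume types and storage backends

After configuring the two storage backends above, testing showed that volumes created with the existing volume type also ended up on the rbd232 backend. That prompted a closer look at the relationship between volume types and storage backends.

First, consider a few parameters in cinder.conf:

enabled_backends: the list of backend names to use, rbd,rbd232 in the example above. Each must be defined in the file as a section, i.e. [rbd] and [rbd232].

default_volume_type: the volume type used by default, i.e. one of those defined in the dashboard under Admin > Volume > Volume Types. Setting this parameter in the configuration file is not enough; the volume type must also actually be created.

volume_backend_name: set inside each backend section, as in the cinder.conf shown above. It is the backend name of the driver; it does not have to match the name used in enabled_backends and can be chosen freely.

The problem described above arose because the existing volume type rbd was not actually associated with a specific storage backend. With only one backend, the scheduler could only choose that backend when creating a volume. With two backends, the scheduler picks whichever it considers more suitable; it judged rbd232 the better fit, so all new volumes were created on the 232 cluster.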
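The scheduling behavior just described can be illustrated with a toy model. This is not Cinder's scheduler code: the function names and the free-capacity figures are made up, and "pick the backend with the most free space" is only a rough stand-in for the scheduler's filtering and weighing.

```python
def candidate_backends(pools, extra_specs):
    """Keep only pools that match the type's volume_backend_name, if any."""
    wanted = extra_specs.get("volume_backend_name")
    return [pool for pool in pools
            if wanted is None or pool["volume_backend_name"] == wanted]


def pick_backend(pools, extra_specs):
    """Choose the candidate with the most free capacity (toy weighing rule)."""
    candidates = candidate_backends(pools, extra_specs)
    if not candidates:
        return None
    best = max(candidates, key=lambda pool: pool["free_capacity_gb"])
    return best["volume_backend_name"]


# Hypothetical capacity numbers, chosen only to reproduce the symptom.
POOLS = [
    {"volume_backend_name": "rbd", "free_capacity_gb": 500},
    {"volume_backend_name": "rbd232", "free_capacity_gb": 2000},
]

# A volume type without extra specs can land on either backend.
print(pick_backend(POOLS, {}))
# A volume type pinned to a backend name can only land there.
print(pick_backend(POOLS, {"volume_backend_name": "rbd"}))
```

In this model an unpinned volume type goes to whichever backend looks best at the moment, which matches what was observed once the second backend was enabled.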

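When I tried to add extra specs or metadata to the rbd volume type, the change was rejected with a message that the volume type status is "in-use": volumes created earlier are still using it, so it cannot be modified.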

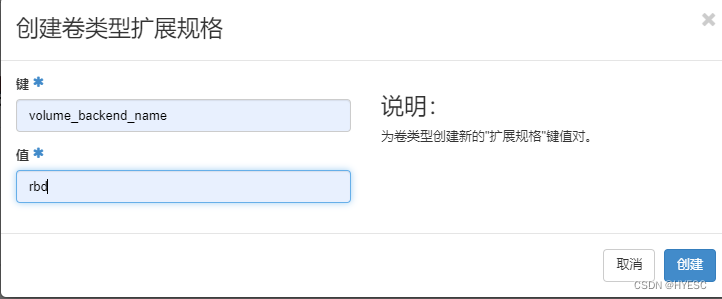

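One way to solve this: create a new volume type, e.g. rbd222, and add the extra spec volume_backend_name=rbd to it. This is the parameter that associates a volume type with an actual storage backend; the value is rbd because the original backend defines volume_backend_name as rbd (see the [rbd] section of cinder.conf above). Finally, set default_volume_type=rbd222 in cinder.conf and restart the services:

systemctl restart openstack-cinder-api # changing default_volume_type in cinder.conf only takes effect after the API service is restarted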

systemctl restart openstack-cinder-volume

systemctl restart openstack-cinder-scheduler

From then on, volumes created with the rbd222 volume type all land on the original storage.

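Illustration of the association process

The takeaway: after creating a volume type, associate it with a storage backend right away, even when there is only one backend, to avoid unnecessary trouble when more backends are added later.

References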

https://medium.com/walmartglobaltech/deploying-cinder-with-multiple-ceph-cluster-backends-2cd90d64b10

https://www.yisu.com/zixun/252458.html

https://docs.openstack.org/cinder/stein/configuration/block-storage/drivers/ceph-rbd-volume-driver.html

https://blog.csdn.net/weixin_40579389/article/details/120875159